Philosopher Nick Bostrom now asks: What if AI fixes everything?

Championed by the likes of Bill Gates, Sam Altman and Elon Musk for over a decade, Nick Bostrom has been one of the biggest voices in AI since he founded the Future of Humanity Institute at Oxford University in 2005. But he hasn’t always seen eye-to-eye with the emerging technology.

Superintelligence

When Nick published Superintelligence in 2014, he introduced what was then a fringe theory: What if AI could become smarter than humanity and turn against us?

Even people in AI research at the time thought this future was a long way off, if it was possible at all. But with the rapid advancement of generative AI over the past few years, Artificial General Intelligence, or AGI, has become a question of ‘when’, not ‘if’. Elon Musk even predicted earlier this year that we will see AI smarter than any human as soon as 2026.

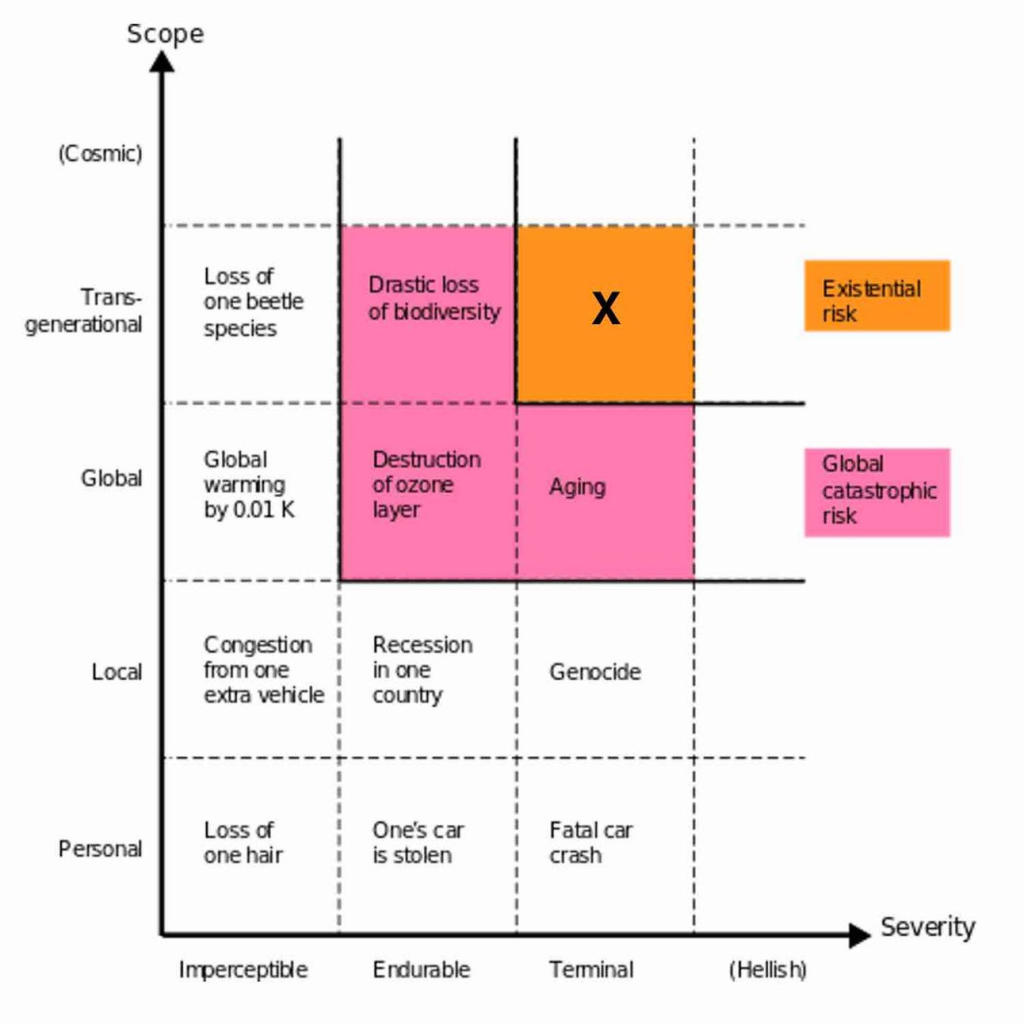

In Superintelligence, Nick stressed the potential existential risks posed by AGI — “We’re like children playing with a bomb.” He outlined the “AI control problem,” which would require its own version of Asimov’s Laws of Robotics to prevent AI from acting against human interests and resisting human intervention.

He followed it up with The Vulnerable World Hypothesis in 2019, which argued that some technologies, including AI-linked threats, may destroy human civilization by default once they are discovered.

Things started to change in 2020. Nick co-authored a new paper, Sharing the World with Digital Minds, that began to explore the benefits of superintelligent AI. He described the potential for an AI, called a “super-beneficiary”, that is more sentient than humans, experiencing a higher rate and intensity of subjective experience with fewer resources. As Nick says, “such beings could contribute immense value to the world.”

Now, Nick’s written a new book, Deep Utopia: Life and Meaning in a Solved World. It considers what a positive AI future might look like — pros and cons.

Here are the 3 questions he’s asking now:

Where do we find value?

If AI solves all our problems and performs all of our labor, what will we find value in?

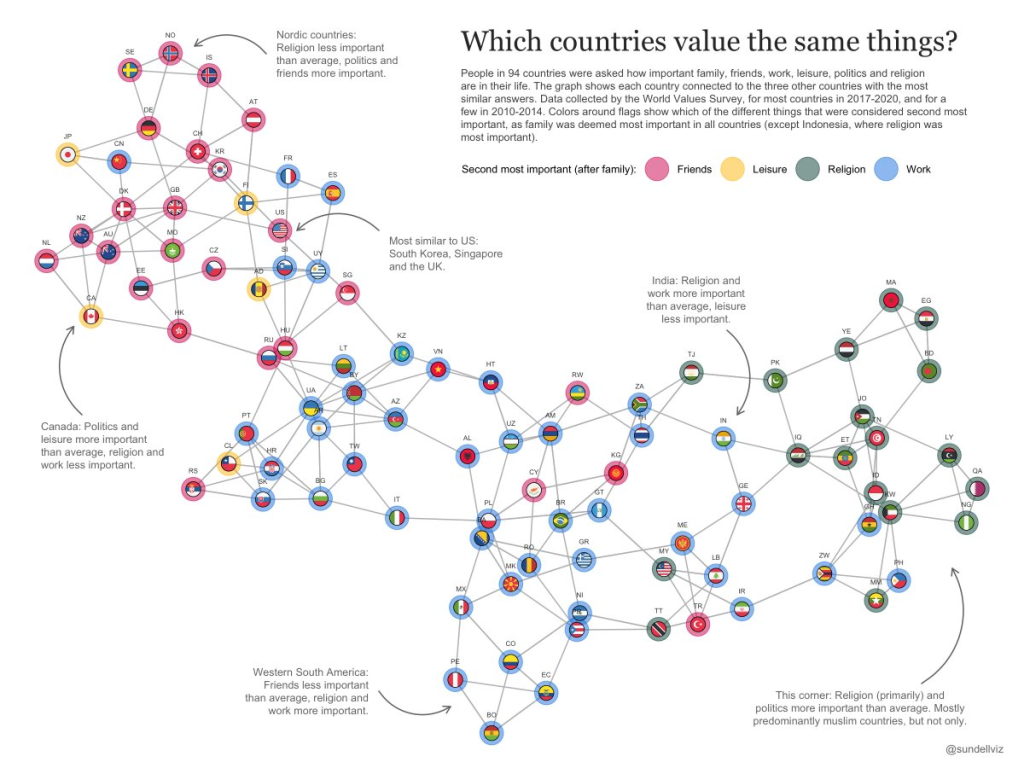

Outside of our relationships, most cultures value work more than leisure, religion, and politics. Some cultures even value work above friendship, including China, France, and India. And as much as some people might hate their jobs, humans inherently like to work — as evidenced by everything from the thousands of people who contribute to open-source platforms like Wikipedia and GitHub to the folks over at Build The Earth who have been recreating every detail of our planet on Minecraft for the past 4 years.

AI is also set to play a part in our leisure and relationships. AI image- and video-generating tools can be seen as part of this; so can Bumble Founder Whitney Wolfe Herd’s plan for “dating-by-proxy” via AI clones.

When we stop being able to contribute to society, what will we do?

What does sentience mean?

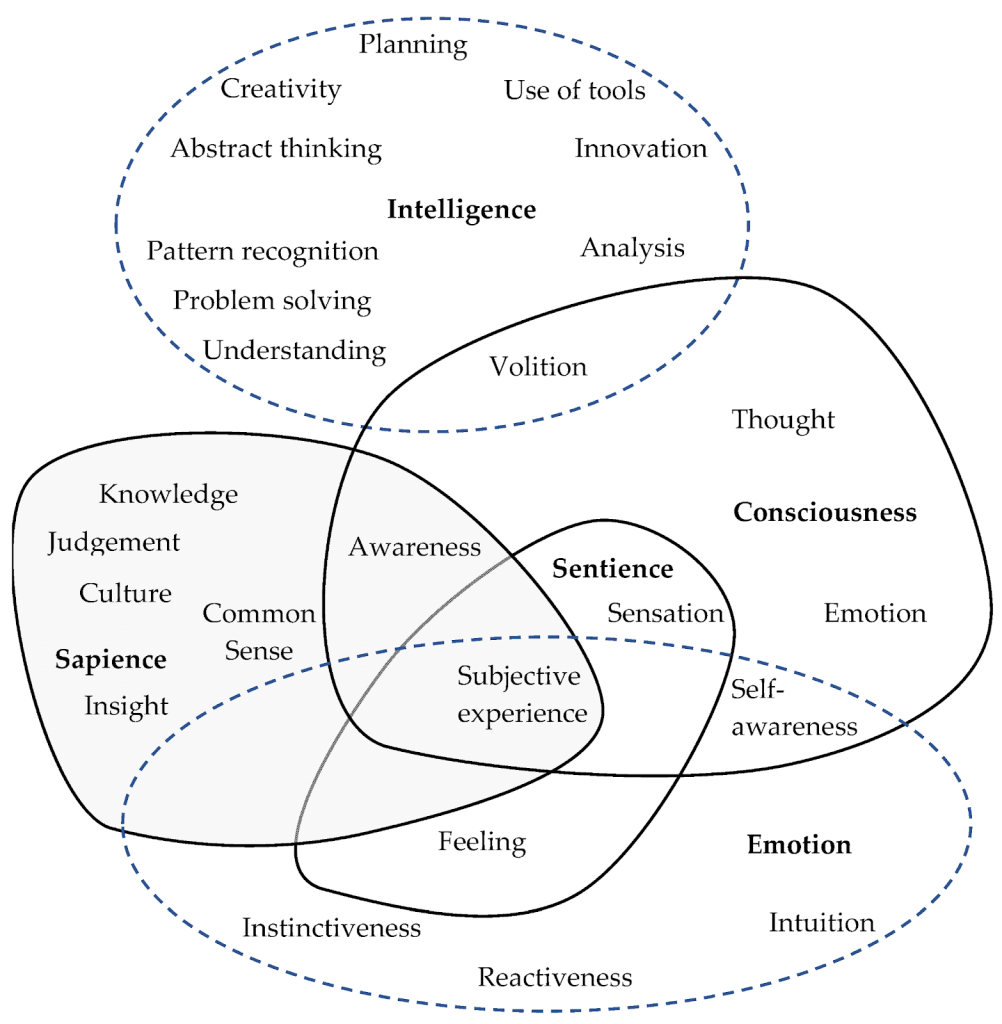

When we achieve AGI, we’ll need to re-work our definition of sentience. One of the challenges with this is that our definition of sentience is patchy to begin with. The term is often used interchangeably with concepts like sapience, consciousness, self-awareness, qualia, and intentionality, but strictly speaking sentience is simply any conscious awareness of stimuli.

Nick equates sentience with feeling. “My view is that sentience, or the ability to suffer, would be a sufficient condition for an AI system to have moral status.”

It’s going to be difficult to measure whether AI is sentient, let alone sapient or self-aware. The scientific community hasn’t even reached a consensus on whether fish are sentient. But with AI researchers like Blake Lemoine already proposing that AI models possess sentience, a position for which Nick has expressed sympathy if not outright endorsement, the debate is only going to take off from here.

Should we aim for AGI at all?

Despite the uncertainties, Nick is optimistic about AGI. He describes how, without striving for AGI, we’d be permanently “confined to being apes in need and poverty and disease.”

As Nick says, “Superintelligence is the last invention that humans will ever need to make.” His focus now is on making sure we don’t end up in the “paperclip maximizer” scenario.

“Suppose we have an AI whose only goal is to make as many paper clips as possible. The AI will realize quickly that it would be much better if there were no humans because humans might decide to switch it off. Because if humans do so, there would be fewer paper clips … The future that the AI would be trying to gear towards would be one in which there were a lot of paper clips but no humans.”

Nick Bostrom, 2014

Basically, if we develop AGI responsibly, with the right rules and stopgaps in place to protect human interests, it will have a net-positive influence on society. Those safeguards matter because a post-scarcity world might also be post-instrumental, meaning the interests and decision-making of different intelligent beings might begin to diverge where they currently align.

But again, Nick thinks these risks can be mitigated with the right preparation.

“Ultimately, I’m optimistic about what the outcome could be,” he says.
