The companies pioneering “boundedness” to tackle AI’s trust issue.

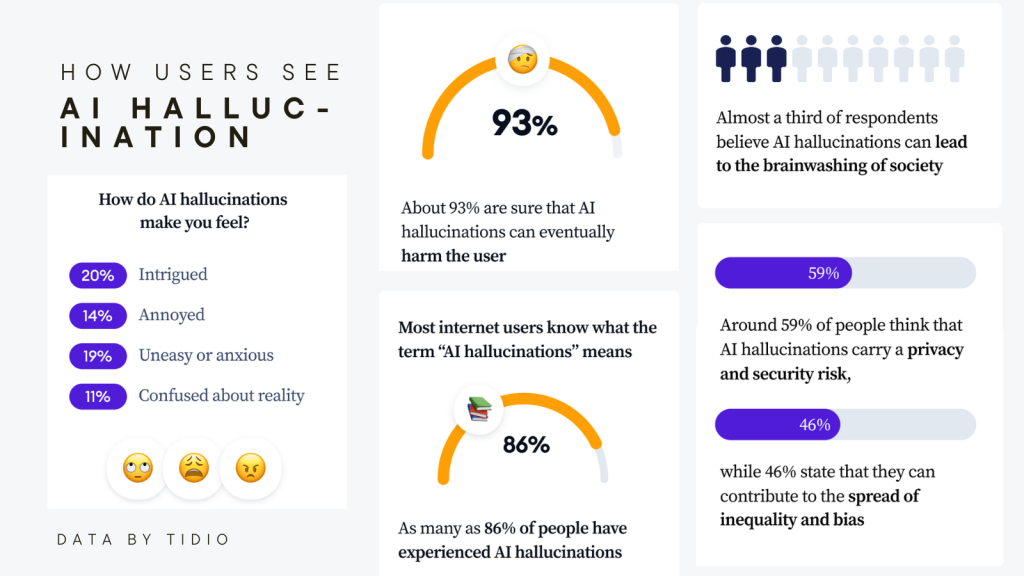

We’ve all seen the (often hilarious) results of AI hallucinating.

Like when Microsoft’s GPT-powered Bing chatbot kept insisting it was in love with a New York Times reporter, or when Google started telling people to eat glue. Lawyers have even been sanctioned for relying on what turned out to be fabricated information from a generative AI model.

There’s even a blatant factual error in this official promo for Bard (see if you can spot it).

That’s where AI boundedness comes in.

The basic principle of AI boundedness? Specialized beats general purpose, any day of the week.

Models like GPT-4 are trained on more data than a human could ever know, with the ambition of being able to answer any question, solve any problem and respond to any input that a user might wish to give them. Bounded AI is the specialized alternative: a model built to serve just one purpose.

Conjecture AI CEO Connor Leahy describes general-purpose AI as “some blob that you tell to do things and then it runs off and does things for you.” That might sound great, but Leahy takes issue with the lack of transparency and clarity of reasoning provided by these closed-source models.

Conjecture AI is breaking down AI systems into separate processes that can then be combined by the user to achieve a complex goal. Leahy’s main focus? That no one part of the system is more powerful than the human brain.
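To make the idea of small, combinable parts concrete, here is a minimal Python sketch. It is not Conjecture's actual design; the names `BoundedStep` and `run_pipeline` are hypothetical, and the two toy steps simply stand in for narrow, single-purpose components. Each step checks that its input is in scope before doing its one job, and every intermediate result is recorded so a person can see how the final output was reached.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class BoundedStep:
    """One narrow, inspectable component (hypothetical name)."""
    name: str
    accepts: Callable[[str], bool]  # scope check: is this input in bounds?
    run: Callable[[str], str]       # the single task this step performs


def run_pipeline(steps: List[BoundedStep], text: str) -> List[str]:
    """Run the steps in order and record every intermediate result."""
    trace = []
    for step in steps:
        if not step.accepts(text):
            # Refuse rather than guess when the input is out of scope.
            raise ValueError(f"{step.name}: input out of scope")
        text = step.run(text)
        trace.append(f"{step.name}: {text}")
    return trace


# Two toy steps standing in for specialized components.
steps = [
    BoundedStep("strip", lambda s: isinstance(s, str), str.strip),
    BoundedStep("lowercase", lambda s: len(s) < 100, str.lower),
]

trace = run_pipeline(steps, "  Hello World  ")
print(trace)
```

The design choice worth noticing is the refusal: a step that receives something outside its remit raises an error instead of improvising, which is the opposite of how a general-purpose model tends to behave.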

And his is far from the only company working towards bounded AI — other AI experts share his concerns.

“If I’m using AI to recommend shoes to display [and] the model is 85% accurate, that’s brilliant — a paradigm shift better than humans could do at scale. But if you’re landing airplanes, 85% ain’t no good,” says Ken Cassar, CEO of AI startup Umnai.

It’s not just the experts who are concerned; the general public is starting to feel the effects of AI hallucination and opaque processes, too. Public distrust of AI is bad for commercial models and for the long-term viability of the technology as a whole.

Meet three of the big players backing bounded AI.

1 – Transparency: Meta’s Llama 2

Llama 2 ranks particularly highly on the 2023 Foundation Model Transparency Index. Meta provides clear insights into how its algorithms operate, and the model is free, open-source, and adaptable.

Models like Llama 2 help to demystify AI decision-making and promote accountability.

2 – Reliability: Aligned AI

Aligned AI prides itself on being “the world’s most advanced alignment platform for AI.” It provides tools to measure bias in foundation models and allows users to give live feedback to the program as it makes decisions.

Aligned AI also promises a way to help AI extrapolate new concepts from its training data and be self-improving where other systems degrade over time.

3 – Democratization: Hugging Face

Hugging Face calls itself “The Home of Machine Learning” — and with good reason. The community-driven platform is used by more than 50,000 organizations, including Meta and Google, and millions of individuals active in machine learning research and innovation.

But we’re far from a consensus on AI boundedness. Washington Post AI columnist Josh Tyrangiel recently wrote an article titled “Honestly, I love when AI hallucinates”, and he’s in good company.

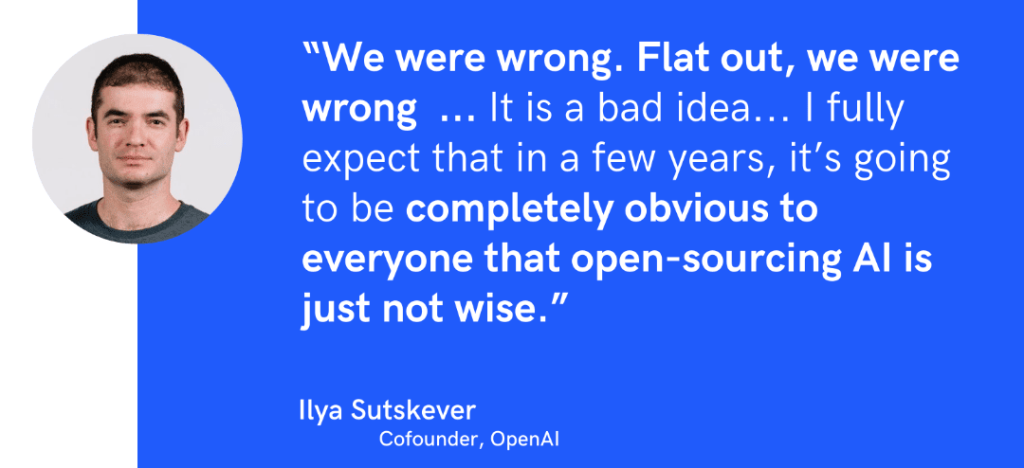

Most major AI models aren’t open-source, including OpenAI’s GPT-4, Google’s Gemini, the image models DALL-E and Midjourney, and Nvidia’s Jarvis. Their developers often cite security, intellectual property, and commercial interests as reasons for not open sourcing them.

Open sourcing can also limit the incentive for companies to invest in cutting-edge research and new models.

So, what’s the takeaway?

With AI boundedness, I think the key is plurality. As Meta VP Ahmad Al-Dahle said about the launch of Llama 2:

“This benefits the entire AI community and gives people options to go with closed-source approaches or open-source approaches for whatever suits their particular application.”

The flaws of current AI are more likely to be solved if as many people as possible are working on them, from as many different angles as possible. And plurality brings competition, which breeds innovation.

As long as we’re realistic with ourselves about the limitations of current GenAI models, there’s nothing to be feared and so much to be gained from AI — bounded or otherwise.
