Peter Thiel’s AI predictions – 10 years later.

Among the kingpins of tech, Thiel was one of the most vocal about AI in the 2010s. He had strong convictions – and he put his money where his mouth was.

He backed OpenAI (back when its mission was “to benefit humanity as a whole, unconstrained by a need to generate financial return.” Huh.) And he was an early backer of DeepMind, back when it was a fresh-faced British startup.

Here are Thiel’s 5 biggest predictions – and how they hold up.

1 – AI is political first, economic second

In a 2014 Reddit AMA, Thiel made it clear that, for him, the social and political aspects of AI come first. He compared it to aliens landing – we would want to know if they were friendly first, then start thinking about economic benefits.

We see this in his early backing of OpenAI and its initial mission of ensuring AI is developed by people with good intentions. In some ways, that priority has since drifted out of general concern.

But there are plenty of contradictions in that debate, too.

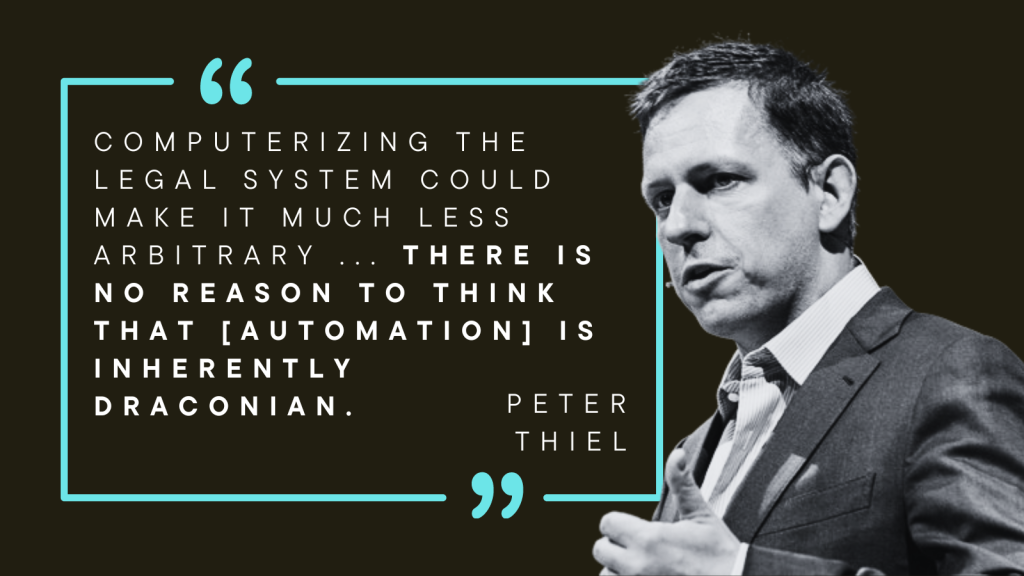

2 – AI is safe

In 2014, echoing the fears of Alan Turing 60 years prior, the likes of Stephen Hawking and Elon Musk were expressing concern over AI. But Thiel sat somewhere between neutral curiosity and techno-optimism.

He joked that, after a 2012 meeting with DeepMind CEO Demis Hassabis, a colleague said “he felt it was his last chance to get to shoot” the CEO and put an end to AI.

In a lecture, he asked big questions about the future of AI in the context of “The Strangeness of AI” – aka the source of our fears. And he suggested the potential benefits of AI for transparency and social order.

3 – AI is more important than climate change

In 2014, Thiel said, “People are spending way too much time thinking about climate change, way too little thinking about AI.”

He was talking about existential risk.

But if he thought AI wasn’t something to fear, what did he mean? Well, optimism isn’t the same as naivety, and Thiel has always recognized the importance of investing in the future you want to see.

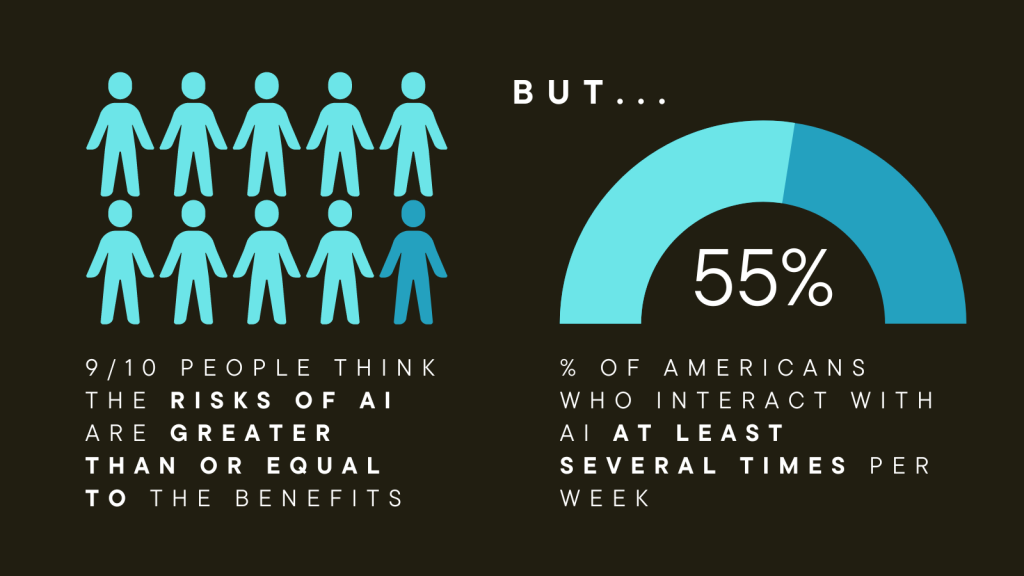

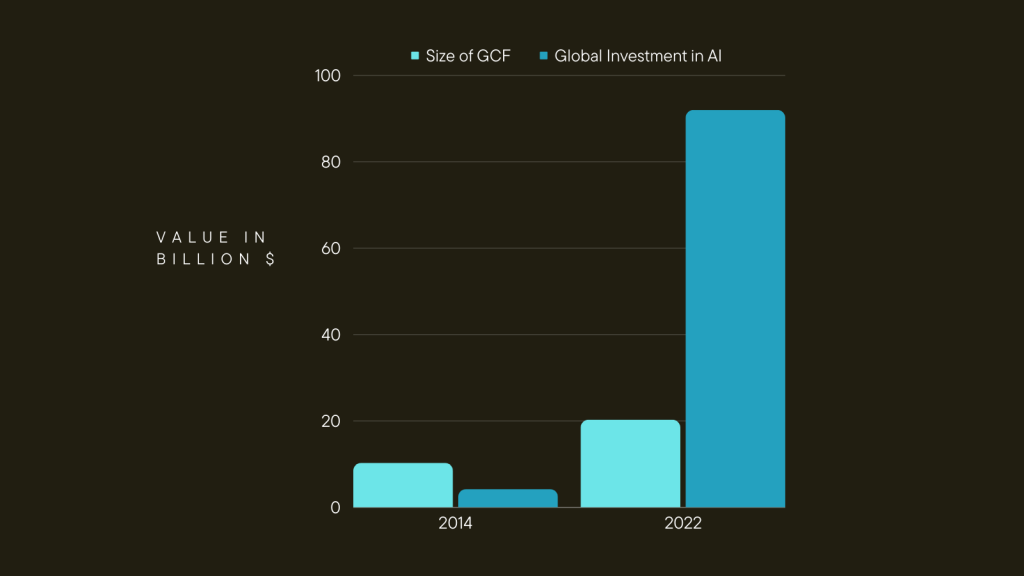

And the data points to this one coming true, at least monetarily. The biggest investment portfolio in climate change, the Green Climate Fund (GCF), is worth $13.5B. Compare that to AI, where startups in 2023 alone secured $23B in investments. And Pew Research Center says public concern is about even (54% and 52%, respectively).

4 – It’s a biotech vs AI future

Also in his 2012 lecture, Thiel compared biotech and AI visions of the future.

“A biotech future would involve people functioning better, but still in a recognizably human way. But AI has the possibility of being radically different and radically strange.”

He describes the invisible barriers of biotech, like the limits of telomerase (which enables unbounded cell division). Basically, there are things we hope can be cured or prevented with biotech, but no guarantee – whereas an AI future could remove these problems, but in a way that starts to look less “recognizably human.”

How does this proposed binary hold up?

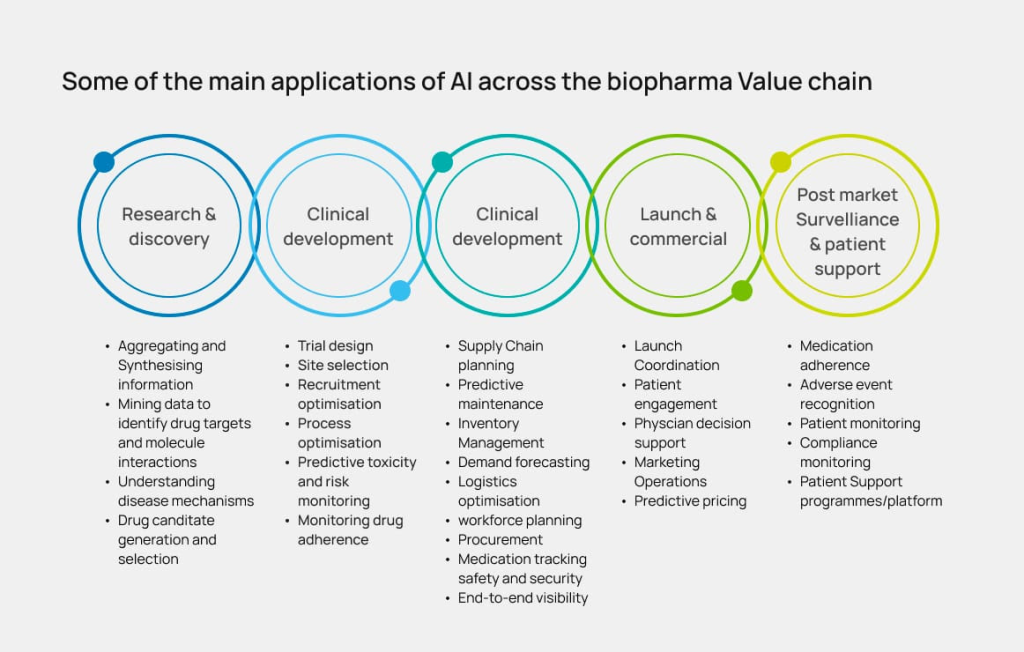

Well, for now, biotech and AI seem to be coexisting just fine. Interest in both has soared in recent years – and there’s plenty of crossover. Companies like BenevolentAI and Google’s Isomorphic Labs are thriving under new AI breakthroughs.

But I think the question of how “recognizably human” our future will be is still a relevant one – just probably for a few decades down the road.

5 – How to invest in “The Singularity”

I wanted to end with this one because it’s the most actionable, and I thought it’d be really interesting to see if the advice holds up at all. It’s also the deepest cut, going all the way back to 2007 – when Clarium Capital was still kicking and AI was often referred to rather cryptically as “The Singularity”.

“The singularity will either be really successful, in which [case] we’re going to have the biggest boom ever, or it is probably going to blow up the whole world” – financially speaking.

Thiel claimed that the bad version of “the singularity” is impossible to financially protect against. Even if you invest everything in gold, he said, “probably some humans or robots or something else will have come along and taken your gold away from you.”

By that logic, the only thing you can do is invest in the good version of an AI world.

And not only that, but Thiel said these investments had already begun – starting in the 70s. Thiel claimed that recent economic booms have represented different bets on the singularity, or at least on proxies for it, like globalization.

His “macro thesis” was that though many of these bets would be wrong, “one of them is going to be real … or the world is going to come to an end.”

To me, this advice holds up amazingly well. As AI becomes more and more entwined with our daily lives, betting against it is just about the worst decision you could make.

Because even if you think it will all implode spectacularly one day, that’s not something any investment can save you from.
