The journey so far, plus 5 startups to watch

In a recent study, 66% of people could give at least a partial explanation of what AI is, in large part thanks to the popularity of OpenAI’s ChatGPT. But one downside of this latest AI boom is its focus on Large Language Models (LLMs), often at the expense of other avenues of research.

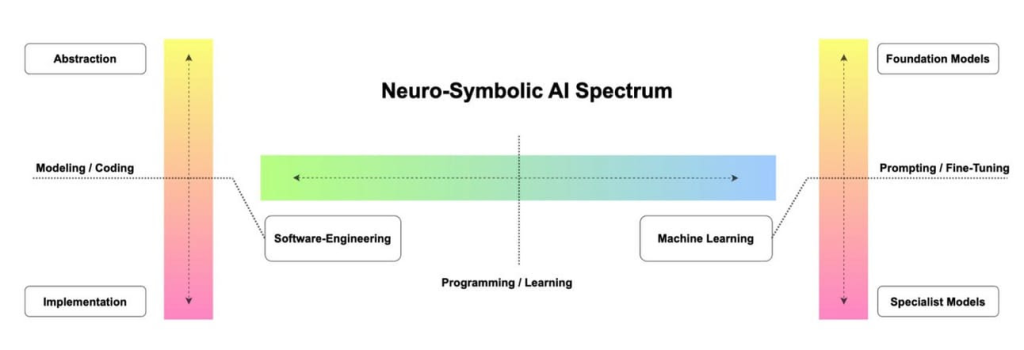

Neuro-symbolic AI combines neural nets, including LLMs, with symbolic systems like knowledge graphs to produce more reliable and transparent reasoning.

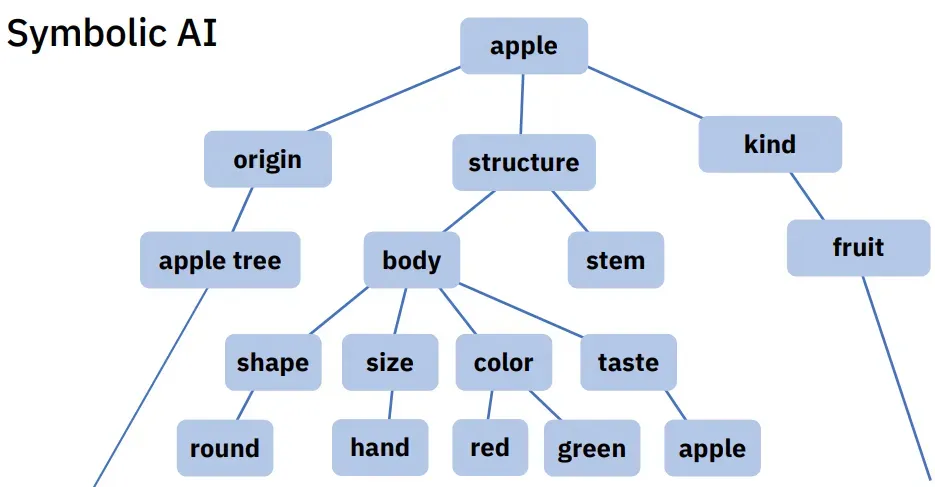

Symbolic AI is based on the idea that humans navigate the world by creating internal symbolic systems and logical rules for dealing with these symbols. It’s also the oldest model for AI, though it’s ‘fallen out of fashion’ in recent years, in part due to the surge in commercial prospects for LLMs.
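To make "symbols and logical rules" a little more concrete, here is a minimal sketch of a symbolic reasoner in Python. It is an illustration only: the facts, the relation names (`is_a`, `can`) and the two rules are made up for this example and do not come from any particular system.

```python
# Toy symbolic reasoner. Facts are (subject, relation, object) triples.
# Rules derive new facts from known ones; we repeat until nothing new appears.
# All facts, relation names and rules here are hypothetical examples.

def forward_chain(facts, rules):
    """Apply every rule repeatedly until no new facts are derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # rule(known) returns a finished set, so adding to `known` is safe here
            for new_fact in rule(known):
                if new_fact not in known:
                    known.add(new_fact)
                    changed = True
    return known


def is_a_transitive(known):
    """If X is_a Y and Y is_a Z, then X is_a Z."""
    derived = set()
    for (x, r1, y) in known:
        for (y2, r2, z) in known:
            if r1 == "is_a" and r2 == "is_a" and y == y2:
                derived.add((x, "is_a", z))
    return derived


def inherit_ability(known):
    """If X is_a Y and Y can Z, then X can Z."""
    derived = set()
    for (x, r1, y) in known:
        for (y2, r2, z) in known:
            if r1 == "is_a" and r2 == "can" and y == y2:
                derived.add((x, "can", z))
    return derived


facts = {
    ("robin", "is_a", "bird"),
    ("bird", "is_a", "animal"),
    ("bird", "can", "fly"),
}
derived = forward_chain(facts, [is_a_transitive, inherit_ability])
print(("robin", "can", "fly") in derived)  # True
```

Nothing here is learned from data: every conclusion can be traced back to an explicit fact and an explicit rule, which is where symbolic systems get their transparency.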

Let’s backtrack a little…

Here’s a timeline of the development of artificial intelligence, from its inception in the 1950s to the rebirth of Symbolic AI in the 2010s.

1950s: AI was born in the mid-1950s with Symbolic AI, an effort to reproduce human reasoning within a machine by manipulating symbols according to logical rules.

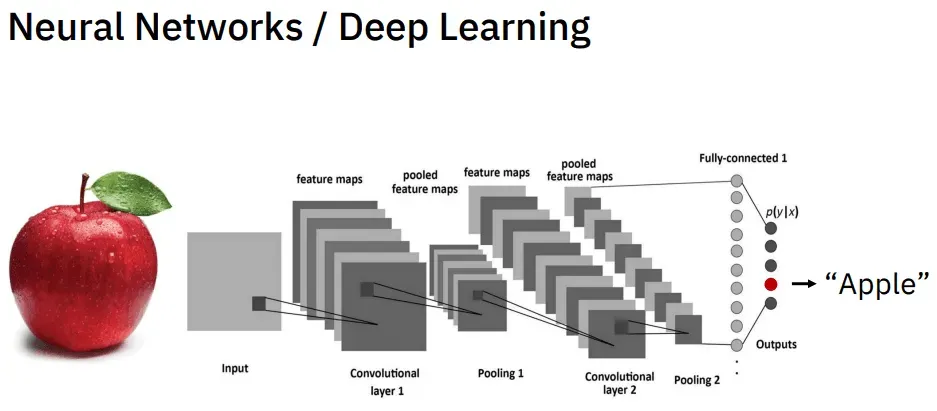

1960s: By the 60s we had the beginnings of deep learning, the basis of modern LLMs. Though a non-learning recurrent neural network architecture, the Ising model, dates back to the 1920s, the multilayer perceptron was not conceptualized until 1962. The first multi-layered deep learning model was built in 1967 by Soviet mathematician Alexey Ivakhnenko.

1980s: The 80s saw a direct challenge to Symbolic AI with NETtalk, a data-fuelled program that learned to pronounce words in much the same way that babies do.

1990s: Machine learning (ML) and deep learning developed throughout the 90s and 2000s, and Symbolic AI took a backseat.

2010s: Symbolic AI saw a resurgence in the 2010s with IBM’s Watson, which combined symbolic AI with probabilistic inference. It outwitted two (human) Jeopardy! champions & even trash-talked!

So AI is smart — but how do we make it sensible?

Researchers like Dan Gutfreund became convinced that the key to truly intelligent, even conscious, AI was common sense. That conviction led him to a very specific avenue of research: babies.

Recent studies of infant neural activity suggest that humans are actually born with an approximate understanding of the world already pre-programmed in our brains. From there, we learn from data how to navigate the real situations we find ourselves in.

One aspect of a child’s cognition that researchers have been able to recreate in an AI model is object permanence. Through a combination of deep neural nets, symbolic AI & a probabilistic physics inference model, the model learned to tell whether an object should be visible after an obstacle was removed.

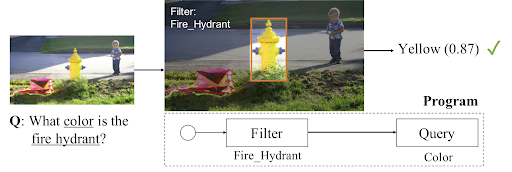

Another project, the Neuro-Symbolic Concept Learner, is being taught colors in the same manner that children learn to identify them. To begin with, the model didn’t know the concept of color, let alone the names of individual colors.

But now it can identify colors with minimal prompting: it has learned to perceive them.

What about LLMs?

Neuro-symbolic AI takes the best parts of LLMs and mitigates some of their flaws, like hallucination and high computation costs.

It creates more transparent, trustworthy AI that’s better able to reason and generalize concepts.
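One way to picture this is a neural model that proposes answers and a symbolic layer that checks them. The Python sketch below is a toy under stated assumptions: `neural_propose` is a hard-coded stand-in for an LLM (it deliberately includes a confident wrong answer), and `KNOWLEDGE_GRAPH` is a tiny hypothetical knowledge graph. Neither reflects how any real product is built.

```python
# Toy neuro-symbolic pipeline: a "neural" proposer plus a symbolic verifier.
# KNOWLEDGE_GRAPH and neural_propose are hypothetical stand-ins for illustration.

KNOWLEDGE_GRAPH = {
    ("Paris", "capital_of", "France"),
    ("Canberra", "capital_of", "Australia"),
}


def neural_propose(country):
    """Stand-in for an LLM: returns (answer, confidence) candidates.

    The values are hard-coded; note the confident but wrong guess for Australia.
    """
    canned = {
        "Australia": [("Sydney", 0.62), ("Canberra", 0.35)],
        "France": [("Paris", 0.97)],
    }
    return canned.get(country, [])


def answer_capital(country):
    """Return the highest-confidence candidate that the graph confirms."""
    candidates = sorted(neural_propose(country), key=lambda c: c[1], reverse=True)
    for candidate, confidence in candidates:
        fact = (candidate, "capital_of", country)
        if fact in KNOWLEDGE_GRAPH:
            # The supporting fact doubles as a human-readable explanation.
            return {"answer": candidate, "confidence": confidence, "evidence": fact}
    # Nothing could be verified, so decline to answer rather than guess.
    return {"answer": None, "confidence": 0.0, "evidence": None}


result = answer_capital("Australia")
print(result["answer"], result["evidence"])
```

The proposer's top guess ("Sydney") is rejected because no fact in the graph supports it, and the answer that is returned comes with the fact that justifies it. That is the basic shape of the trade: the neural part supplies breadth, the symbolic part supplies a check and an explanation.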

What about math?

Google DeepMind is leading the charge on neuro-symbolic models for math.

Its model AlphaGeometry 2, working alongside DeepMind’s other model AlphaProof, was the first to reach silver-medal standard at the International Mathematical Olympiad (and the pair were just 1 point off gold).

But it’s not just giants like Google and IBM that are leading the way in neuro-symbolic AI.

Here are 5 of my favorite neuro-symbolic AI startups from around the world that you should be paying attention to.

- Symbolica

By building “Reasoning Machines”, Symbolica want to create AI that is “controllable, interpretable, reliable, and secure.”

A combination of structured cognition & symbolic reasoning models “the multi-scale generative processes used by human experts.”

Check them out here.

- Extensity AI

Extensity is all about creating “AI-first workflows.”

They’ve pioneered a Symbolic API that bridges the gap between classical programming & differentiable programming.

Check them out here.

- AnotherBrain

Taking a completely different approach is AnotherBrain’s Organic AI.

While deep learning was based on the brain’s neural pattern, Organic AI is macroscopic, “where large neuronal populations have a dedicated function. Ex: orientation and curvature detection.”

Check them out here.

- UMNAI

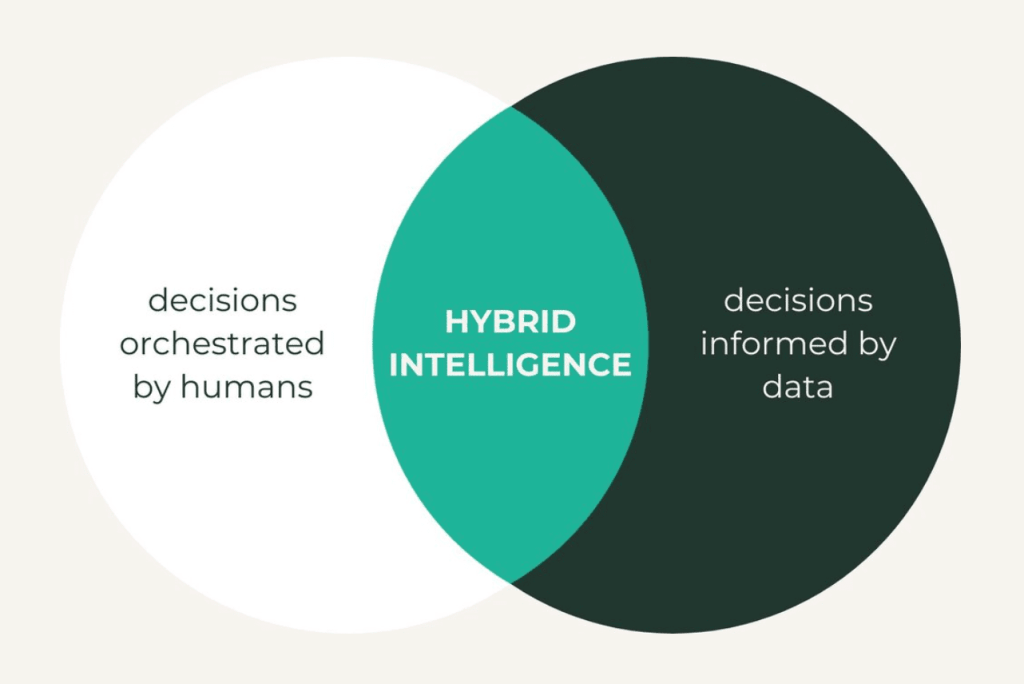

UMNAI’s XNNs are “transparent hyper-graph neural models organized and orchestrated symbolically, integrating the power of neural nets with the observability of symbolic logic.”

An XNN tells you exactly how it reached its conclusion so you can check its logic.

Check them out here.

- Synfini

Combining neuro-symbolic AI with robotic automation, Synfini is speeding up drug discovery.

They’re combining “physical and virtual chemistry” for the “benefit of human health and longevity.”

Check them out here.
